Securely Executing LLM Generated Code, for Agentic Systems and Otherwise

It’s not safe to let the LLM run code and use tools in the same environment where your application is running. Isolation of executed code to prevent unauthorized access to system resources or user data. - https://e2b.dev

One of the things that Generative AI is good at is generating code. We see this in use all the time in tools like ChatGPT and Claude, where the LLMs generate code that is sometimes executed within the “chat” tool itself. For example, in Claude there is the concept of “artifacts”, which are files created by the LLM, which in some situations can even be run by the LLM provider, and the results shown to the user. However, the code generated by LLMs is untrusted. We don’t know exactly what the code will do because it was created by an AI model, not a human. (Though of course, human code isn’t that easy to predict either!)

Expanding the Capabilities of LLMs

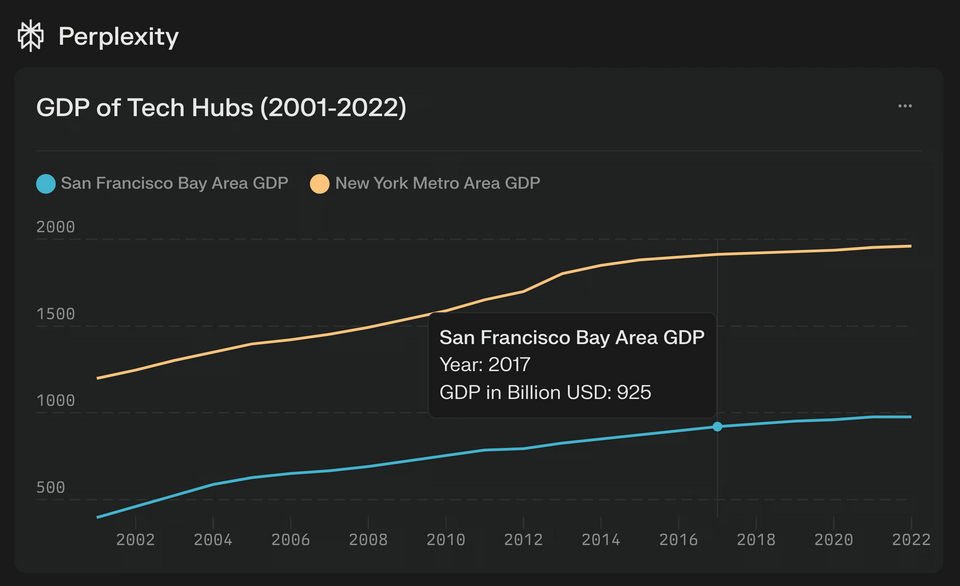

Above, a chart generated by Perplexity’s LLM

Chatting with an LLM is one thing: you enter text and get text back. But what if we want more than text, like a graph, or some kind of output that requires the use of code to generate? Because LLMs can generate code, at least at this point short snippets of code, they can do almost anything…not just return text. So if a company wants to extend the capabilities of their LLM product to provide market differentiation, they need to find a way to run that code. But, again, it is untrusted code, and so it needs to be secured and it needs to be contained.

Agentic Systems

On a related note, it’s quite likely that the real power of LLMs and GenAI is going to be in agentic systems, where it’s not just a human interacting with a chatbot-fronted LLM, building “artifacts” somewhat awkwardly, but rather a system that has many LLM “agents” working together behind the scenes to accomplish a complicated task. These agents will need to execute code to do their work. So not only do we need to run LLM-generated code in an advanced chat-like product environment, but we also need to use these agentic systems and provide them the ability to run code. Somewhere.

E2B and Perplexity

Many times when people are asking Perplexity questions, there is an underlying need to run code. If I’m looking at a stock price, I want to see a chart with its price trends. When searching for election data, it’s helpful to see a breakdown of votes for different candidates. It’s fairly easy for the models to generate the code today, but when you are serving millions of users like Perplexity is, there are real infrastructure challenges to supporting code interpreter capabilities… - https://e2b.dev

In order for Perplexity to build specialized features into their LLM product, they needed to find a way to execute the code they generate. They chose E2B.

From E2B’s blog, in which they describe Perplexity’s use of E2B:

- “Security and isolation: E2B’s use of Firecrackers by AWS for creating isolated environments is a secure way to run LLM-generated code.”

- “Ease of use: E2B comes with a built-in Python and Javascript SDK for easy development”

- “Stateful, highly customizable code execution: E2B sandboxes are able to do almost anything you want them to do. They support stateful code execution, are super performant, and come with highly adjustable settings that you can tune to suit your needs.”

E2B

E2B has built a solution to sandbox LLM generated code. In the end, the code is executed in a small, fast virtual machine (yes, virtual machine!) provided by a technology called Firecracker, which is also used by AWS for things like AWS Lambda.

The E2B Sandbox is a small isolated VM the can be started very quickly (~150ms). You can think of it as a small computer for the AI model. You can run many sandboxes at once. Typically, you run separate sandbox for each LLM, user, or AI agent session in your app. For example, if you were building an AI data analysis chatbot, you would start the sandbox for every user session. - https://e2b.dev/docs

E2B has quite a bit of open source code, which can be found in their Github account:

As well, they allow anyone to sign up for an account and try it out.

Firecracker

While Kubernetes and Docker have been all the rage in the application deployment world, containers don’t necessarily contain. Containers are usually–not always, but usually–a form of process isolation, and that process isolation is not as strong as a full virtual machine. Certainly we have made great strides in process isolation, such as seccomp, apparmor, Linux namespaces, etc., etc., but virtual machines are hard to beat.

…enables you to deploy workloads in lightweight virtual machines, called microVMs, which provide enhanced security and workload isolation over traditional VMs, while enabling the speed and resource efficiency of containers. Firecracker was developed at Amazon Web Services to improve the customer experience of services like AWS Lambda and AWS Fargate. - https://firecracker-microvm.github.io/

It should be noted that there is a lot of code that has to be wrapped around the LLM and on top of Firecracker to make it all work together. But clearly, small, fast virtual machines are the way to go for highly isolated, high performance code execution. That said, Firecracker and similar technologies aren’t a silver bullet; they don’t solve every single security problem.

Next Up…

In future posts, we’ll explore the use of tools and systems like E2B to improve the security of applications and agent-based systems that use LLM-generated code.