Model Context Protocol Security

At the March 2025 TAICO meetup, I went over the Model Context Protocol (MCP) in general, and then looked at the security implications of the protocol.

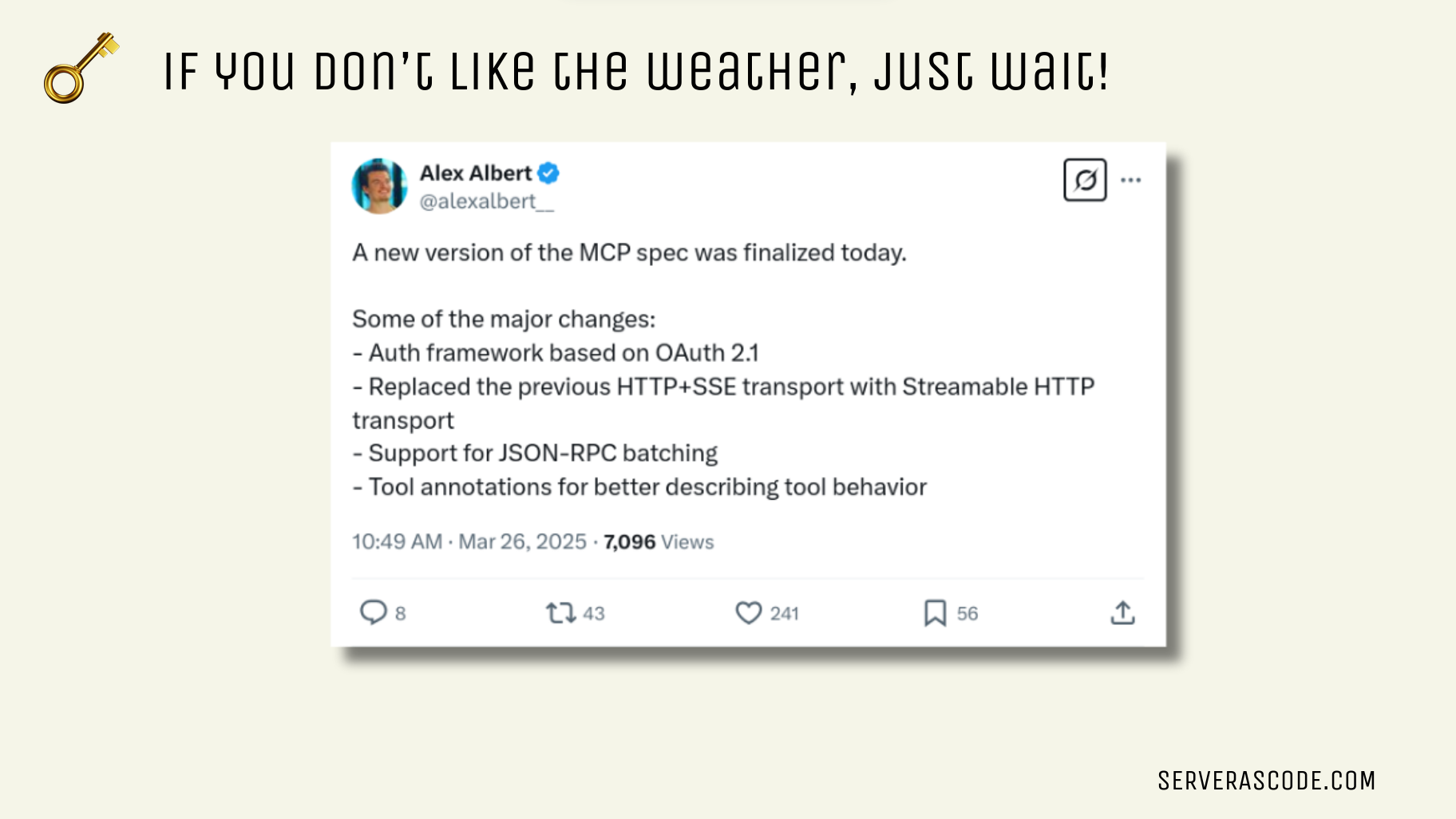

Now, it’s important to remember that MCP is new. Very new. And it will change. In fact, if you are reading this post some time after March 2025, the protocol will probably have changed, and changed for the better.

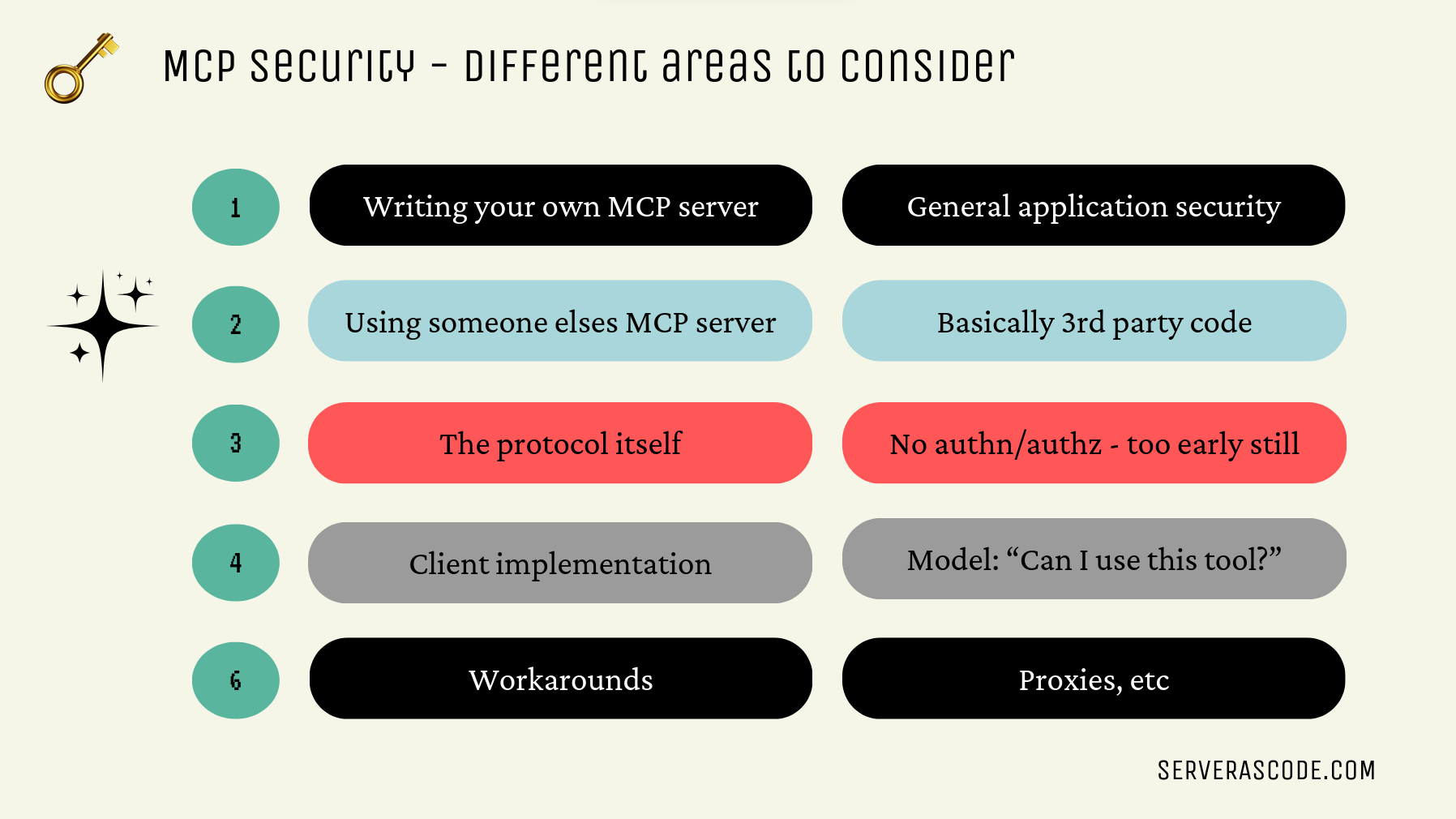

Security Areas

There are quite a few security areas to consider when using MCP. Here’s a non-exhaustive list of the ones that come to mind first:

- Using someone else’s MCP plugin

- The security of the protocol itself, e.g. authentication and authorization

For this post, I’ll focus on the first.

Using someone else’s MCP plugin

In simple terms, MCP is a framework for creating plugins. These plugins can provide tools, resources and prompts for an LLM. MCP makes it really easy to create and use plugins, and just as easy for the MCP client to consume them.
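To make "plugins provide tools" a bit more concrete, here is a toy sketch of the request/response shape, loosely modeled on the JSON-RPC 2.0 messages MCP uses. The method names `tools/list` and `tools/call` follow the spec as I understand it at the time of writing; the `TOOLS` registry, the `add` tool and `handle_request` are my own hypothetical names, and the real protocol carries more than this (input schemas, initialization, transports). It is not the official SDK.

```python
import json

# Hypothetical tool registry for illustration: name -> (description, function).
TOOLS = {
    "add": ("Add two integers.", lambda args: args["a"] + args["b"]),
}


def _error(request_id, code, message):
    """Build a JSON-RPC 2.0 error response."""
    return json.dumps(
        {"jsonrpc": "2.0", "id": request_id, "error": {"code": code, "message": message}}
    )


def handle_request(raw: str) -> str:
    """Handle one JSON-RPC 2.0 request string and return the response string."""
    request = json.loads(raw)
    request_id = request.get("id")
    method = request.get("method")
    params = request.get("params", {})

    if method == "tools/list":
        # The client (and so the LLM) learns what the plugin offers.
        result = {
            "tools": [
                {"name": name, "description": description}
                for name, (description, _fn) in TOOLS.items()
            ]
        }
    elif method == "tools/call":
        tool = TOOLS.get(params.get("name"))
        if tool is None:
            return _error(request_id, -32602, "Unknown tool")
        _description, fn = tool
        # The security-relevant line: the plugin author's code runs here,
        # with whatever privileges this server process has.
        value = fn(params.get("arguments", {}))
        result = {"content": [{"type": "text", "text": str(value)}]}
    else:
        return _error(request_id, -32601, "Method not found")

    return json.dumps({"jsonrpc": "2.0", "id": request_id, "result": result})
```

The point of the sketch is how little stands between a model deciding to call a tool and someone else’s function running on your machine.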

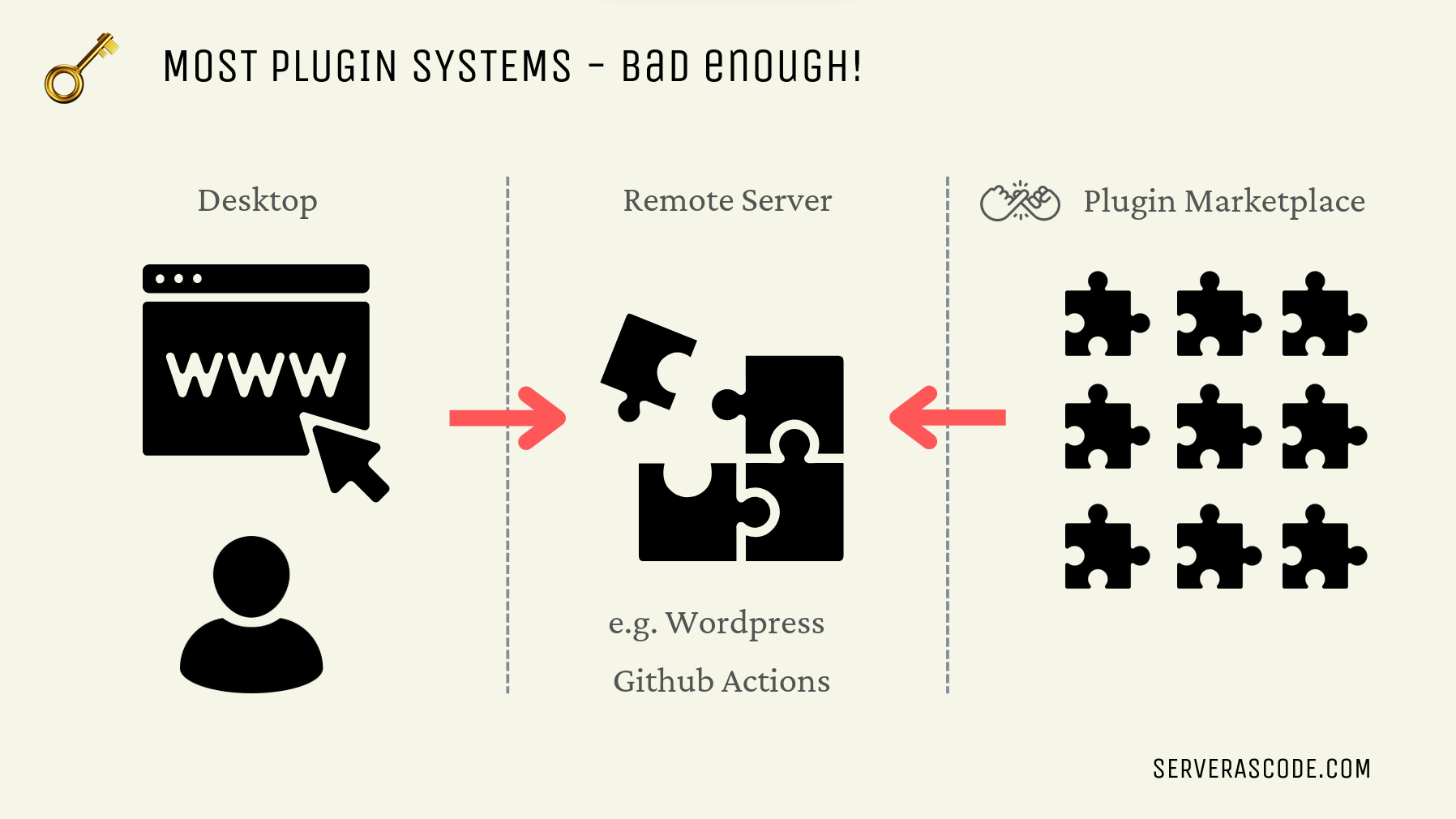

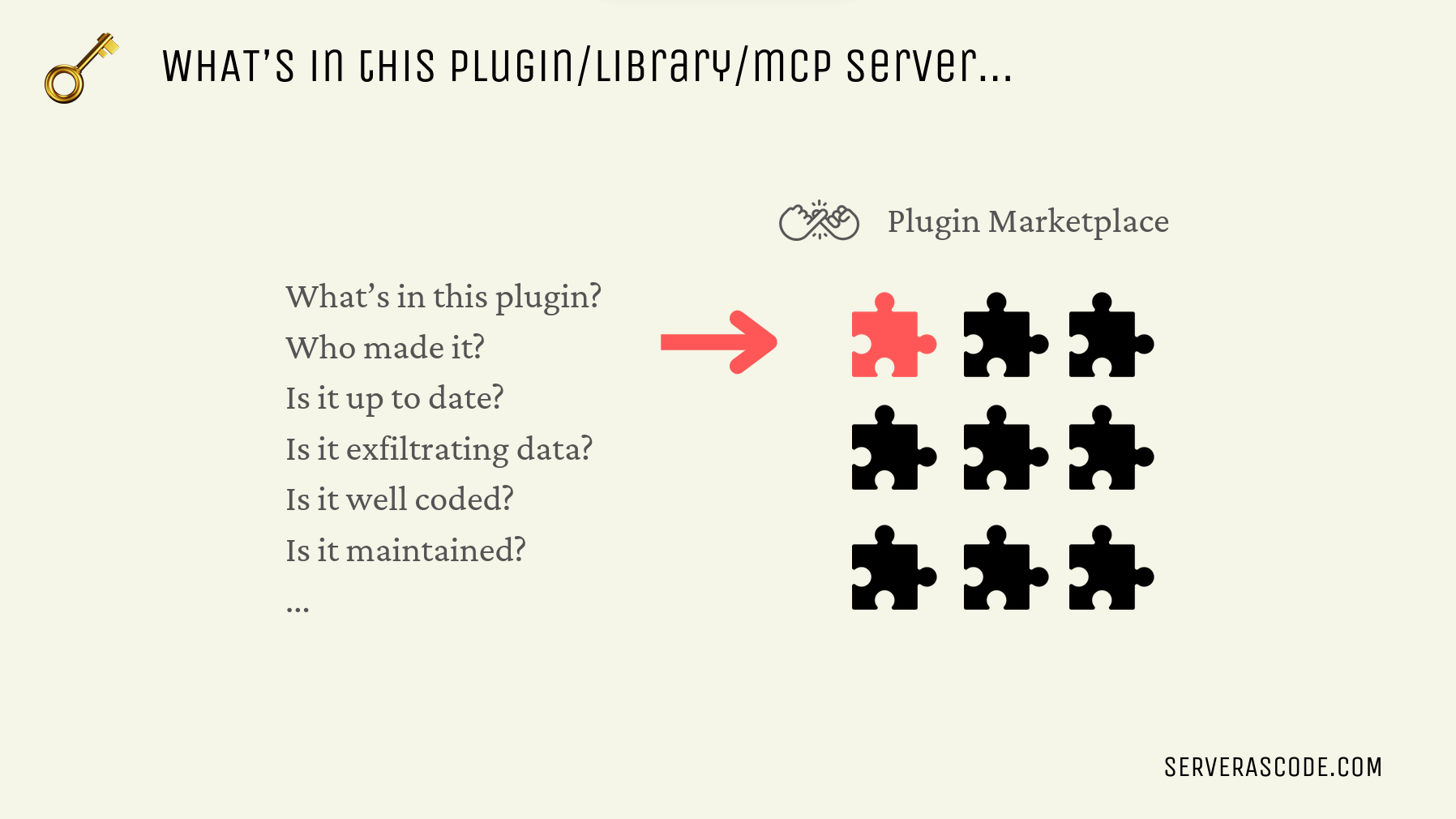

BUT, plugins are just borrowed code, like any other plugin model, e.g. WordPress plugins (notorious for security problems) or GitHub Actions (which have had their own security problems recently).

Any time we borrow code, we have to consider its security implications: audit it in some fashion, verify it, and decide how far we trust it. MCP is no different, and it’s no easier to secure.
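One small piece of the "verify it" step can be sketched in code: pinning the hash of the plugin artifact you actually reviewed, and refusing to launch anything else. This is only a minimal sketch under my own assumptions (the function names are hypothetical, and the plugin is assumed to be a single file on disk). It does not replace an audit; it only checks that what runs is what you looked at.

```python
import hashlib
import hmac
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Return the hex SHA-256 digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def is_reviewed_version(path: Path, pinned_sha256: str) -> bool:
    """True only if the file on disk matches the digest pinned at review time."""
    return hmac.compare_digest(sha256_of(path), pinned_sha256.lower())
```

A launcher could call `is_reviewed_version` before starting the server process and bail out on a mismatch, which at least catches a silently updated or tampered plugin.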

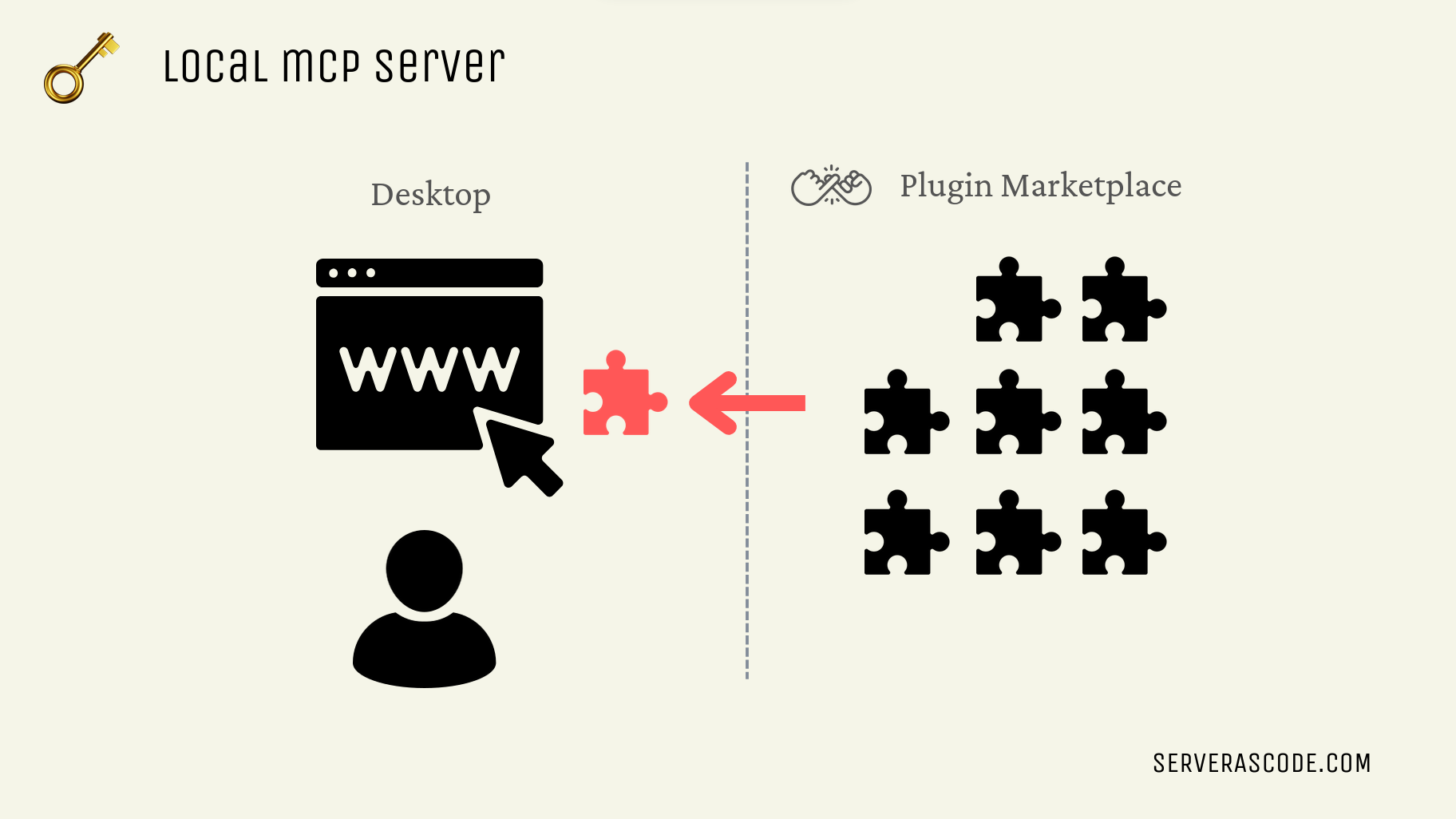

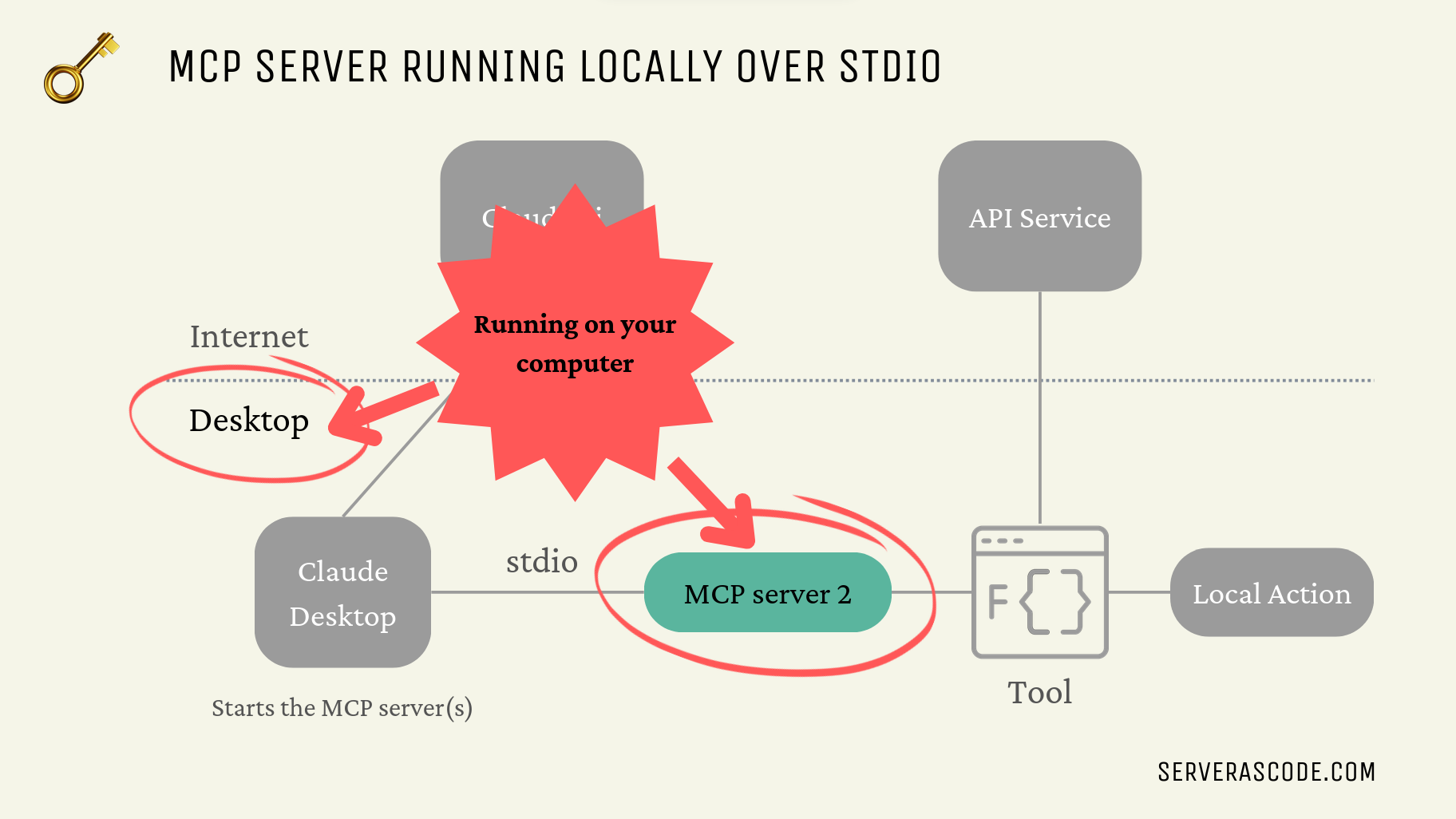

A key difference, however, is that, at least at the moment and most often, you run MCP plugins LOCALLY on your own computer, or wherever the “agent” or “AI application” is running. This is a big difference from WordPress plugins or GitHub Actions, where the code runs on a remote server.

Sure, that remote server has value (environment variables, etc.), but your local computer has a lot of personal value: maybe it’s where you do your banking, or where you keep your family photos.

In this example we’re using Claude Desktop, which has support for MCP plugins. Through its configuration it starts the local MCP servers for us, either as local processes or as Docker containers, so it is all running locally. (I suppose that if you have to run an MCP server locally on Windows, Docker is a bit safer: the server runs in a container that is INSIDE a WSL2 Linux VM, so it’s not directly exposed to the Windows operating system.)
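As a rough illustration of those two launch styles, the sketch below builds a configuration in the `mcpServers` shape (a `command` plus `args` per server) that Claude Desktop’s config file used at the time of writing. The server names, the npm package `example-mcp-file-server`, the image `example/mcp-file-server` and the paths are all hypothetical placeholders. The Docker flags are standard ones; adding `--network none` and a read-only mount is my own suggestion, not something the source setup used.

```python
import json


def build_config(project_dir: str) -> dict:
    """Build an illustrative MCP server configuration with two launch styles."""
    return {
        "mcpServers": {
            # Style 1: a plain local process, running with your user's privileges.
            "files-local": {
                "command": "npx",
                "args": ["-y", "example-mcp-file-server", project_dir],
            },
            # Style 2: the same idea in a container, with no network access
            # and the project directory mounted read-only.
            "files-docker": {
                "command": "docker",
                "args": [
                    "run", "-i", "--rm",
                    "--network", "none",
                    "--mount", f"type=bind,src={project_dir},dst=/projects,readonly",
                    "example/mcp-file-server",
                    "/projects",
                ],
            },
        }
    }


print(json.dumps(build_config("/Users/me/projects"), indent=2))
```

Either way the client simply runs the command it is given, so whatever that command pulls down and executes is the code you are trusting.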

Ultimately, plugins are something we need to gain trust in, and that trust has to be earned.

Will Running Local MCP Servers Continue?

I don’t expect most of us to consume “LLM plugins” by running them locally on our own laptops or desktops. It’s just too risky, and it probably doesn’t make much sense unless the plugin actually needs to change your local environment, e.g. write files, rename them, etc.

It might make sense in a server environment, and indeed for agents to be useful they need to be able to affect the local environment. On local desktops, though, the use cases are quite limited and will probably stay confined to special cases, file management again being a good example. Even then, we’d really need to trust that particular plugin and the code behind it, perhaps to the extent that it only comes from the OS vendor itself.
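For the file management case, one thing I would want from such a plugin is that it confines itself to a directory I chose. Here is a minimal sketch of that check, assuming Python 3.9+ for `Path.is_relative_to`; `resolve_inside` is a hypothetical helper name, and a check like this is only as trustworthy as the plugin that claims to perform it.

```python
from pathlib import Path


def resolve_inside(root: Path, requested: str) -> Path:
    """Resolve `requested` under `root`, refusing anything that escapes it.

    Resolving first collapses `..` segments and follows symlinks, so the
    containment check is made on the real target path.
    """
    real_root = root.resolve()
    candidate = (real_root / requested).resolve()
    if not candidate.is_relative_to(real_root):
        raise PermissionError(f"{requested!r} is outside the allowed directory")
    return candidate
```

A tool handler would call `resolve_inside` on every path argument before touching the filesystem, so that a request for `../../.ssh/id_rsa` fails instead of being served.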

Improvements Are On The Way

The MCP team is already working on improvements to the protocol, as one would expect with any new protocol.

Conclusion

At a high level, MCP servers are plugins, and those plugins are borrowed code. That said, we probably won’t be running many of them locally in the future, and that’s a good thing.

As the protocol matures, the more interesting security aspects will be in how plugins get access to services and data, which for now will be via OAuth.

“Authentication & Authorization: Adding standardized auth capabilities, particularly focused on OAuth 2.0 support.” (MCP Roadmap)
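How MCP will wire OAuth in is not settled as I write this, so the following is only a generic OAuth 2.0 sketch, not MCP’s mechanism. It builds a standard authorization-code request URL and reads back the scopes being asked for, which is the part that matters for "what can this plugin touch". The endpoint, client ID, redirect URI and scope names in the usage note are hypothetical.

```python
import secrets
from urllib.parse import parse_qs, urlencode, urlsplit


def build_authorization_url(
    authorize_endpoint: str,
    client_id: str,
    redirect_uri: str,
    scopes: list[str],
    state: str,
) -> str:
    """Build an OAuth 2.0 authorization-code request URL."""
    query = urlencode(
        {
            "response_type": "code",
            "client_id": client_id,
            "redirect_uri": redirect_uri,
            # OAuth 2.0 scopes are a single space-delimited string.
            "scope": " ".join(scopes),
            # Opaque value echoed back on the redirect, used to detect CSRF.
            "state": state,
        }
    )
    return f"{authorize_endpoint}?{query}"


def requested_scopes(authorization_url: str) -> list[str]:
    """Extract the scopes an authorization URL asks for."""
    params = parse_qs(urlsplit(authorization_url).query)
    return params.get("scope", [""])[0].split()


def new_state() -> str:
    """Generate an unguessable state value for one authorization attempt."""
    return secrets.token_urlsafe(16)
```

For example, `build_authorization_url("https://auth.example.com/authorize", "demo-client", "http://localhost:8765/callback", ["calendar.read"], new_state())` asks for read-only calendar access, and a client could show the result of `requested_scopes` to the user before sending them off to approve it.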

In future posts we’ll explore more about MCP security, especially as it becomes clearer how the protocol will evolve.